The Database Availability Group (DAG) is an exciting new Exchange 2010 technology that brings low-cost high availability without costly SAN hardware.

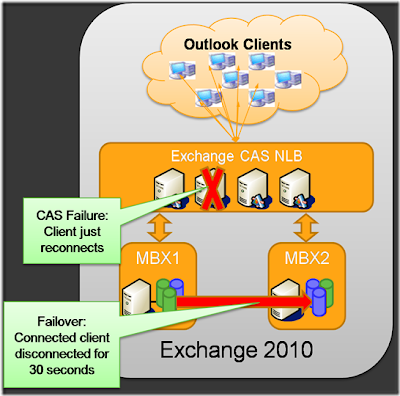

Microsoft Exchange Server 2010 clients will connect to Client Access Servers (CAS), which will proxy the requests to the mailbox servers. There is no more LCR, SCR, or CCR. The DAG (or “Super CCR”) uses low-cost DAS storage and spreads database copies across multiple servers, in the way “Raid 5” stripes data across disks. Client Access Servers (set up in load-balanced server farms) will provide the primary HTTP endpoint and a new “distributed RPC endpoint” that emulates a “standard Exchange mailbox server” for Office 2010, Office 2007, and Office 2003, so the clients do not need to be upgraded.

Because clients connect to the CAS servers, which proxy requests to the mailbox servers, moving a database from one mailbox server to another in the DAG takes less than 30 seconds, whether it is triggered by a failover or by a move command.
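The proxy idea can be illustrated with a small Python sketch. This is a toy model only, not how Exchange implements it: the class `ClientAccessServer`, its methods, and the server and database names are invented for illustration, and where the real product keeps the database-to-server mapping is not modelled here. The point is simply that the client keeps talking to the same endpoint while the active copy moves behind it.

```python
class ClientAccessServer:
    """Toy stand-in for a CAS: clients talk to it, it forwards to the active copy."""

    def __init__(self, active_server_by_database):
        # Maps database name -> name of the mailbox server hosting the active copy.
        self.active_server_by_database = dict(active_server_by_database)

    def move_active_copy(self, database, target_server):
        """Model a failover or move: only the server-side mapping changes."""
        self.active_server_by_database[database] = target_server

    def proxy(self, database, request):
        """Forward a client request to whichever server is currently active."""
        server = self.active_server_by_database[database]
        return f"{request} -> {database} on {server}"


cas = ClientAccessServer({"MBXDB1": "EXMBX1"})
print(cas.proxy("MBXDB1", "open mailbox"))  # served by EXMBX1

cas.move_active_copy("MBXDB1", "EXMBX3")    # failover or switchover
print(cas.proxy("MBXDB1", "open mailbox"))  # same client call, now served by EXMBX3
```

The client-side call is identical before and after the move, which is why the client does not need to be reconfigured when a database fails over.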

Some other notable highlights in Exchange 2010 database and HA architecture:

- Replication between databases will change from SMB-based log file copying to a TCP socket method, which will increase performance on heavily loaded servers.

- Replication can be local or remote (cross-subnet). However, you will need CAS servers at the DR site in case you lose the primary datacenter.

- You can have up to 16 mailbox servers in a DAG.

- There will be no integration with Microsoft Online at the DAG level. Microsoft Online cannot be used as a DR site for an on-premise hosted mailbox. A mailbox is either on-premise or hosted, not a mixture of the two.

- You still need Windows Server 2008 Enterprise, as the failover clustering feature is required.

- The concept of storage groups is deprecated.

- Jet is still the storage engine for Exchange 2010 databases.

- Exchange I/O has been reduced by 50% from Exchange 2007 to 2010 (on top of the 70% I/O reduction from Exchange 2003 to 2007).

- Single Instance Storage is going away, as is the per-database table. A separate table is created for each mailbox, which makes mailboxes with 10,000+ messages practical thanks to the sequential read capability.

- Server-based PST files allow archiving with access from anywhere. This helps with e-discovery, OWA searches, and compliance management.

Public folders are not covered by the new DAG changes. The only way to replicate public folders in Exchange 2010 is the same public folder replication we have used for the past 10 years. SCR replication of the public folder database for DR scenarios, possible in Exchange 2007, is deprecated in Exchange 2010. Clients will also continue to connect directly to public folders on the mailbox servers in the DAG; public folders do not take part in the new Client Access Server model that Exchange 2010 introduces for mailbox databases. Public folders are a legacy platform, and significant changes won’t be introduced.

What has been removed?

1. EVS/CMS: there is no more EVS or CMS.

2. The link between a database and a server: a database is no longer associated with a server but is an organization-level resource.

3. The requirement to choose cluster or non-cluster at installation: an Exchange 2010 server can move in and out of a DAG as needed.

4. The limitation of only hosting the Mailbox role on a clustered Exchange server.

5. Storage groups: they have been removed from Exchange.

Is anything the same?

1. Windows Server Enterprise Edition is still required, since a DAG still uses pieces of Windows Failover Clustering.

What’s New?

1. Other roles can be installed on the mailbox server when it is a member of a DAG

2. A database name must be unique in the Exchange Org

A DAG is a collection of up to 16 Exchange 2010 Mailbox servers that monitor and protect the mailbox databases defined within the DAG. LCR and SCC are gone; SCR and CCR are still there in evolved form, both now known as DAG.

One major change when it comes to clustering is that you can have any combination of Exchange 2010 roles on your server. So if you want to build an Exchange 2010 cluster on just two servers, you can. Even the UM role is fine with it. The Edge Transport role is the only exception to this rule, but it lives its own life in the DMZ anyway.

Another cool feature of the DAG is that you don’t have to create a Windows Failover Cluster (WFC) beforehand. This also means you don’t risk ending up with a hard-coded cluster node; you’re free to remove a server from the DAG and end up with a regular Mailbox server role.

When you add the first Exchange server to the DAG, it checks whether there is an underlying cluster. If it doesn’t find one, it automatically installs and configures WFC for you.

One interesting thing to notice is that whenever the number of Mailbox servers in the DAG is even (2, 4, 6, ...), the File Share Witness (FSW) is part of the cluster quorum, but as soon as you have an odd number of servers it drops out. It isn’t deleted from the hard drive; it just isn’t part of the cluster anymore. The FSW also does not come into play when the very first server is added to the DAG. When a DAG is formed, it initially uses the Node Majority quorum model. When the second Mailbox server is added, the quorum is automatically changed to the Node and File Share Majority model, and the DAG begins using the witness server to maintain quorum. If the witness directory does not exist, Exchange automatically creates it and provisions it with full control permissions for local administrators and for the cluster network object (CNO) computer account of the DAG.
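The even/odd rule can be written down in a few lines of Python. This is a toy model of the behaviour as described in this post, not Exchange or WFC code; the function name `dag_quorum` and the fields it returns are made up for illustration. It assumes each Mailbox server has one vote and that the witness adds one vote only when the member count is even.

```python
def dag_quorum(member_count):
    """Toy model of the quorum rule described in this post (not Exchange code).

    Returns the quorum model, whether the File Share Witness (FSW) votes,
    the total number of votes, and the votes needed for a majority.
    """
    if not 1 <= member_count <= 16:
        raise ValueError("a DAG holds between 1 and 16 Mailbox servers")

    # Even number of members: the witness supplies the tie-breaking vote.
    uses_witness = member_count % 2 == 0
    model = "Node and File Share Majority" if uses_witness else "Node Majority"

    total_votes = member_count + (1 if uses_witness else 0)
    votes_needed = total_votes // 2 + 1  # strict majority of all votes

    return {
        "model": model,
        "uses_witness": uses_witness,
        "total_votes": total_votes,
        "votes_needed": votes_needed,
    }


for n in (1, 2, 3, 4):
    q = dag_quorum(n)
    print(n, q["model"], q["votes_needed"], "of", q["total_votes"])
```

With two members, for example, the witness brings the total to three votes, so the DAG keeps quorum as long as any two of the three voters can see each other.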

To set up a DAG from the command line, follow these steps:

1. Install your forthcoming nodes as you would any other Exchange 2010 server.

2. Then put your cluster NICs on their own subnet and apply the other settings we normally configure on cluster NICs. See: Recommended private "Heartbeat" configuration on a cluster server - http://support.microsoft.com/default.aspx/kb/258750

3. Create the DAG

Example: New-DatabaseAvailabilityGroup -Name DAG1 -FileShareWitnessShare \\EXHUB1\DAG1FSW -FileShareWitnessDirectory C:\DAG1FSW

4. Create the networks for the DAG. You need a minimum of two:

one public for client connections

Example: New-DatabaseAvailabilityGroupNetwork -DatabaseAvailabilityGroup DAG1 -Name DAGPUB -Description "Public Client traffic network" -Subnets 192.168.0.0/24 -ReplicationEnabled:$False

and one for dedicated replication traffic.

Example: New-DatabaseAvailabilityGroupNetwork -DatabaseAvailabilityGroup DAG1 -Name DAGCLU -Description "Replication network" -Subnets 10.0.0.0/8 -ReplicationEnabled:$True

5. Then add your nodes:

Add-DatabaseAvailabilityGroupServer -Identity DAG1 -MailboxServer EXMBX1 -DatabaseAvailabilityGroupIpAddresses 192.168.0.25

Remember that you have to run this on the nodes directly if WFC isn’t already on the machine, since it isn’t possible to install WFC remotely. However, if WFC is already installed and the cluster admin tool is installed on the machine you’re sitting at, you can run it from that machine.

Also remember to add -DatabaseAvailabilityGroupIpAddresses if you don’t have DHCP or if you want to statically assign an IP address to the DAG.

6. Add a copy of the database that the DAG should protect:

Add-MailboxDatabaseCopy -Identity MBXDB1 -MailboxServer EXMBX3 -ReplayLagTime 00:10:00 -TruncationLagTime 00:15:00 -ActivationPreference 2

Now you have your very own DAG running!
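If you build DAGs often, the command lines for steps 3, 5 and 6 can be generated from a short plan. The following Python sketch only assembles text; it does not talk to Exchange. The helper name `build_dag_commands` and its arguments are hypothetical, the cmdlet and parameter names are copied from the steps above as written (check them against your Exchange build), and the network step and the lag-time parameters are left out for brevity.

```python
def build_dag_commands(dag_name, witness_share, witness_dir, servers, copies):
    """Return Exchange Management Shell lines for steps 3, 5 and 6 above.

    servers: list of Mailbox server names to add to the DAG.
    copies:  list of (database, target_server, activation_preference) tuples.
    """
    # Sanity checks based on the limits mentioned in this post.
    if not 1 <= len(servers) <= 16:
        raise ValueError("a DAG holds between 1 and 16 Mailbox servers")
    if len(set(servers)) != len(servers):
        raise ValueError("duplicate server name")
    for _, target, _ in copies:
        if target not in servers:
            raise ValueError(f"{target} is not a member of {dag_name}")

    # Step 3: create the DAG.
    lines = [
        f"New-DatabaseAvailabilityGroup -Name {dag_name} "
        f"-FileShareWitnessShare {witness_share} "
        f"-FileShareWitnessDirectory {witness_dir}"
    ]
    # Step 5: add each Mailbox server.
    lines += [
        f"Add-DatabaseAvailabilityGroupServer -Identity {dag_name} -MailboxServer {s}"
        for s in servers
    ]
    # Step 6: add the database copies.
    lines += [
        f"Add-MailboxDatabaseCopy -Identity {db} -MailboxServer {target} "
        f"-ActivationPreference {pref}"
        for db, target, pref in copies
    ]
    return lines


for line in build_dag_commands(
    "DAG1", r"\\EXHUB1\DAG1FSW", r"C:\DAG1FSW",
    ["EXMBX1", "EXMBX3"], [("MBXDB1", "EXMBX3", 2)],
):
    print(line)
```

Review the generated lines before pasting them into the Exchange Management Shell; the sketch checks only the server count and that each copy targets a DAG member.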

For a basic test of functionality, try a switchover (which is what you do when performing maintenance on the server holding the currently active database copy): Move-ActiveMailboxDatabase "Executive Database" -ActivateOnServer EXMBX3 -MountDialOverride:None

----------------------------------------------------------------------------------------